Realizing Prokudin-Gorskii's Dream

posted by dJsLiM on Wednesday, September 14, 2005 ::

Soooo... We had our first assignment. You can read more about it here. The gist of the assignment was that you'd be given 3 grayscale photographs of the same scene, each taken through a blue, green, and red filter respectively. You're required to take those as your source and produce a colorized composite.

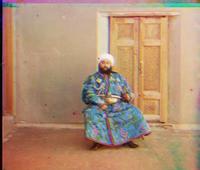

So here is a sample image:

As you can see, it contains 3 photographs of the same scene: the top one represents the blue channel, the next the green, and the last the red.

So I thought, OK, that seems simple enough. I'll just cut the long image into 3 equally sized chunks, take the grayscale value from the top one, use it as the value for "B" in the (R, G, B) triplet of the final color composite, then rinse, lather, and repeat for "G" and "R".
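The original script was written in MATLAB and isn't shown here, so what follows is only a minimal Python sketch of the same split-and-stack idea. It assumes the scan is a list of rows of gray values with the blue plate on top; the names `split_plates` and `stack_rgb` are hypothetical.

```python
def split_plates(scan):
    """Cut a tall scan into three equal-height plates: blue (top), green, red (bottom).

    `scan` is a list of rows of gray values; leftover rows (if the height is not
    divisible by 3) are dropped from the bottom.
    """
    h = len(scan) // 3
    return scan[0:h], scan[h:2 * h], scan[2 * h:3 * h]


def stack_rgb(red, green, blue):
    """Zip three equally sized gray plates into rows of (R, G, B) triplets."""
    return [
        [(r, g, b) for r, g, b in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(red, green, blue)
    ]


# Usage: a 6x2 "scan" whose plates are filled with 10 (blue), 20 (green), 30 (red)
scan = [[10, 10], [10, 10], [20, 20], [20, 20], [30, 30], [30, 30]]
blue, green, red = split_plates(scan)
composite = stack_rgb(red, green, blue)
print(composite[0][0])  # (30, 20, 10)
```

Nothing here shifts the plates, which is exactly why the first composite comes out looking like a 3D-glasses picture.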

After about 10 lines of MATLAB code, I was able to produce the following composite:

Man, doesn't it take you back to the good ol' days of staring into those nutty images out of them 3D books with the cheapo 3D glasses? Awwwww.... Hours and hours of fun! ^^

Well, so the first obvious challenge here was to align the three images so that they're correctly superimposed. The assignment suggested that we try a couple of different metrics that, given two images, give us an idea of how closely they match up. The first approach was to calculate the sum of squared differences, or SSD. You might think it sounds fancy, but once you start taking the name literally, it becomes quite clear that it's dead simple. You take the difference in value at each point of the two images, square it so it's always positive, then just add 'em all up. If the number is low, that means there's not much difference between the two, and if the number is high, well... you get the idea.
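Taken literally, the SSD fits in a few lines. This is a Python sketch assuming both images are equally sized lists of rows of gray values; `ssd` is just an illustrative name.

```python
def ssd(a, b):
    """Sum of squared differences between two equally sized gray images (lists of rows)."""
    total = 0.0
    for row_a, row_b in zip(a, b):
        for x, y in zip(row_a, row_b):
            d = x - y
            total += d * d  # squaring makes every difference count as positive
    return total


print(ssd([[1, 2], [3, 4]], [[1, 2], [3, 4]]))  # 0.0  (identical images)
print(ssd([[1, 2], [3, 4]], [[2, 2], [3, 1]]))  # 10.0 (1 + 0 + 0 + 9)
```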

The second method was to calculate the normalized cross-correlation, or NCC. As you may already know, when we talk about measuring the correlation between two given patterns (an image being just a pattern of intensity values), what we're trying to find out is how closely the two "co-vary". In other words, we're trying to find out how similar the two patterns are. The "normalized" part means each image's average brightness and overall contrast are factored out first, so a plate that came out darker than the others can still score a perfect match. This time, the higher the value, the more similar they are, with 1 being a perfect match.
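Here's one standard way to compute NCC, sketched in Python under the same assumption of equally sized lists of rows: subtract each image's mean, scale each to unit length, and take the dot product. The name `ncc` is illustrative, and returning 0 for a perfectly flat image is a choice made here to avoid dividing by zero.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equally sized gray images (lists of rows).

    Both images are flattened and mean-subtracted, then the dot product is divided
    by the product of their lengths, giving a score in [-1, 1].
    """
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    dx = [x - mx for x in xs]
    dy = [y - my for y in ys]
    norm = math.sqrt(sum(d * d for d in dx)) * math.sqrt(sum(d * d for d in dy))
    if norm == 0:
        return 0.0  # a perfectly flat image has no pattern to correlate with
    return sum(p * q for p, q in zip(dx, dy)) / norm


a = [[1, 2], [3, 4]]
print(round(ncc(a, [[2, 4], [6, 8]]), 6))  # 1.0  (same pattern, double the contrast)
print(round(ncc(a, [[4, 3], [2, 1]]), 6))  # -1.0 (inverted pattern)
```

The first usage line shows the point of normalizing: doubling every value changes the SSD a lot but leaves the NCC at 1.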

So there you have'em, SSD and NCC. Now, how can we use these measurements to find out the correct way to superimpose the 3 images? Well, the simplest way would be to keep one image in place, superimpose another one, calculate either the NCC or the SSD value, slide it a lil bit, calculate the values again, etc... until you find yourself a decent SSD or NCC value that you're happy with. The amount by

With this new feature implemented, the script shifted the green channel by (0, 6) and the red by (0, 13) before superimposing the two on top of the blue channel. The result seemed decent.
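The slide-and-score search can be sketched as an exhaustive loop over a displacement window. This Python sketch assumes circular shifts (wrapping pixels around the edges, the way MATLAB's `circshift` does) and scores with SSD; a radius of 7 gives a 15x15 window. The names `shift` and `best_shift` are hypothetical.

```python
def ssd(a, b):
    """Sum of squared differences between two equally sized gray images."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def shift(img, dy, dx):
    """Circularly shift an image down by dy rows and right by dx columns."""
    h, w = len(img), len(img[0])
    return [[img[(i - dy) % h][(j - dx) % w] for j in range(w)] for i in range(h)]


def best_shift(moving, fixed, radius=7):
    """Try every (dy, dx) in [-radius, radius] x [-radius, radius] and keep the lowest SSD."""
    best, best_score = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            score = ssd(shift(moving, dy, dx), fixed)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best


# Usage: misalign a 20x20 test image by (-2, 3), then recover the correcting shift
fixed = [[20 * i + j for j in range(20)] for i in range(20)]
moving = shift(fixed, -2, 3)
print(best_shift(moving, fixed))  # (2, -3)
```

Swapping in NCC would just mean keeping the highest score instead of the lowest.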

Now, do you notice how this image lacks the black border found in the original? Well, that clump of blackness messed up my calculations, so I chopped the borders off before aligning the channels. The above photograph was particularly tricky because there were parts toward the bottom of one of the pictures that kept skewing the result. I could probably have spent more time finding a better area for comparison, or devised an automated approach, such as edge detection, to discard the portions of the channels where there would have been too strong a match (i.e. a clump of blackness compared to a clump of blackness). Well, maybe next time. ;)
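Chopping the borders off can be as simple as scoring only the interior of each plate. In this minimal Python sketch, the 10% margin on every side and the name `crop_border` are assumptions, since the post doesn't say how much was cut. The displacement found on the cropped plates would then be applied to the full plates.

```python
def crop_border(plate, frac=0.1):
    """Return the interior of a plate, dropping `frac` of its height and width on every side."""
    h, w = len(plate), len(plate[0])
    mh, mw = int(h * frac), int(w * frac)
    return [row[mw:w - mw] for row in plate[mh:h - mh]]


# Usage: a 10x10 plate loses one row and one column on each side
plate = [[10 * i + j for j in range(10)] for i in range(10)]
inner = crop_border(plate)
print(len(inner), len(inner[0]), inner[0][0])  # 8 8 11
```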

So, is that it, you ask? Well, that woulda been suh-weeeeet, cuz at about this point I had grown somewhat of an allergic reaction to MATLAB's quirky syntax. ;) So, no, it wasn't over yet.

The second challenge came in the form of performance optimization. Calculating the SSD or NCC values over a 15x15 displacement window isn't horrible. Throw a P4 at it, and you'll get the images aligned in no time. Now, things get funky when we need to align images that are bigger, like... a lot bigger. The main issue would be

Anyway, the idea behind the Gaussian pyramid is that, instead of calculating the SSD or NCC values on the full image, we calculate them on a scaled-down version of the image. That image obviously has to be small enough that we can be happy with a measly 15x15 displacement window. Once we've found the displacement with the best SSD or NCC value on the scaled-down image, we shift the image by that amount, scale back up a notch (doubling the displacement, assuming each level is half the size of the one above it), and rinse, lather, repeat until we're back to our full-resolution image. So you see, the whole point is that since we're progressively shifting the image, a 15x15 displacement window can be good enough throughout the process, and oooooh yes, it runs much faster. ;)
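A coarse-to-fine version of the search might look like the Python sketch below. It makes several assumptions: circular shifts, SSD scoring, halving at each level, and a [1, 2, 1]/4 binomial kernel (wrapping at the edges) standing in for a true Gaussian blur. The names `search`, `blur_and_halve`, and `pyramid_align`, as well as the `min_size` cutoff, are all hypothetical. Each level searches a 15x15 window centred on twice the shift found one level down.

```python
import random


def ssd(a, b):
    """Sum of squared differences between two equally sized gray images."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def shift(img, dy, dx):
    """Circularly shift an image down by dy rows and right by dx columns."""
    h, w = len(img), len(img[0])
    return [[img[(i - dy) % h][(j - dx) % w] for j in range(w)] for i in range(h)]


def search(moving, fixed, cy, cx, radius):
    """Exhaustive SSD search over a (2*radius+1)^2 window centred on (cy, cx)."""
    best, best_score = (cy, cx), float("inf")
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = ssd(shift(moving, dy, dx), fixed)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best


def blur_and_halve(img):
    """Smooth rows, then columns, with [1, 2, 1]/4 (wrapping at edges); keep every other pixel."""
    h, w = len(img), len(img[0])
    rows = [[0.25 * r[(j - 1) % w] + 0.5 * r[j] + 0.25 * r[(j + 1) % w] for j in range(w)]
            for r in img]
    cols = [[0.25 * rows[(i - 1) % h][j] + 0.5 * rows[i][j] + 0.25 * rows[(i + 1) % h][j]
             for j in range(w)] for i in range(h)]
    return [row[::2] for row in cols[::2]]


def pyramid_align(moving, fixed, radius=7, min_size=32):
    """Estimate the shift on half-size copies, double it, then refine at this resolution."""
    if min(len(fixed), len(fixed[0])) <= min_size:
        return search(moving, fixed, 0, 0, radius)  # coarsest level: plain search
    cy, cx = pyramid_align(blur_and_halve(moving), blur_and_halve(fixed), radius, min_size)
    return search(moving, fixed, 2 * cy, 2 * cx, radius)


# Usage: a random 48x48 plate misaligned by (-6, 4)
rng = random.Random(0)
fixed = [[rng.randint(0, 255) for _ in range(48)] for _ in range(48)]
moving = shift(fixed, -6, 4)
print(pyramid_align(moving, fixed))  # (6, -4)
```

With real multi-megapixel scans you'd want more pyramid levels and a vectorized library, but the structure stays the same: every level only ever searches a 15x15 window.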

With all this done, I was curious to see if I could run my script on my very own RGB-filtered photos. So I ripped some transparent filing labels off of my folders (yeah, I'm that cheap) and got down and dirty. ;) Here's what I ended up with:

Here's the post-processed image:

Now, it's pretty obvious the result sucks royal ass... Hmmph! I blame it on my sucky filters... =P In an attempt to see if I could make it any better, I implemented a simple white balancing script to get the following image:
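The post doesn't say which white-balancing method the script used. One simple possibility is the "gray world" assumption, which scales each channel so that all three channels end up with the same average. This Python sketch assumes the composite is a list of rows of 8-bit (R, G, B) tuples, and `gray_world_balance` is a hypothetical name.

```python
def gray_world_balance(image):
    """Scale each channel so its mean matches the mean of all three channel means.

    `image` is a list of rows of (R, G, B) tuples with values in 0..255.
    """
    pixels = [p for row in image for p in row]
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    target = sum(means) / 3
    gains = [target / m if m > 0 else 1.0 for m in means]  # leave an all-black channel alone
    return [
        [tuple(min(255, round(p[c] * gains[c])) for c in range(3)) for p in row]
        for row in image
    ]


# Usage: two pixels with a strong red cast come out neutral gray
print(gray_world_balance([[(200, 100, 100), (100, 50, 50)]]))
# [[(133, 133, 133), (67, 67, 67)]]
```

Gray world works best when the scene really does average out to gray, which is a big "if" with homemade filing-label filters.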

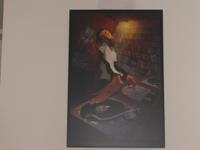

Hmm... I suppose that's at least recognizable... ^^; Here is what it was supposed to look like:

So that's the end of the first assignment! If you're interested in taking a look at all the photographs that I have processed using my script, head over here. I just hope Prokudin-Gorskii can rest in peace now! :)

3 Comments:

hi. is there any way to see the code behind this project? it would be very helpful. thanks

please please reply to pedra89@gmail.com

Hi Simone! Wow, I almost forgot I had written this blog post. It's been several years! :) Unfortunately I don't think I have the code anymore... Or if I do, I can't find it right now. But the idea is very simple. Since color values are encoded in RGB with 8 bits per channel, you simply map the 8-bit grayscale values of each of the three grayscale images to R, G, and B respectively. Hope that helps!

Hi. I am confused about the implementation of the SSD. Can you please tell me, once you slide the G color channel within the [15 15] window, do you calculate the SSD with the entire images or a part of them? If we have to calculate the SSD with the whole images, isn't it very slow? It would be really helpful if you could clarify my doubt. Thanks!
